Smithsonian Learning Lab: Search

A web analytics study to analyze user search behaviors and improve search results.

My Role

UX Researcher. Focused on search terms, “Refine Search” filters, methods for searching by specific museums, and filling content gaps to meet user needs.

Methods

Web analytics

Tools

Google Analytics UA, Google Data Studio, Google Sheets

Team

Allysha Leonard, Jesse Ludington, Arthi Sundararajan, and Wenjing Wu @ Pratt Center for Digital Experiences

Timeline

6 weeks (Mar.–May 2022)

Our Project

The Smithsonian Learning Lab is a free platform for discovering millions of digital resources (objects, artwork, photographs, text, audio, video, and more) from across the Smithsonian, creating personalized interactive learning experiences (“collections”), and sharing in the Smithsonian’s expansive community of knowledge and learning. It is designed for PreK–12 teachers and students but is available for anyone to use. The team behind the Lab, the Smithsonian Office of Educational Technology (OET), leads the Smithsonian in increasing digital access to museum resources for PreK–12 education.

Our goals

Faced with the increasing number of users and user-created interactive experiences on the platform, OET wants to understand how users interact with the Lab’s primary feature: search.

Our research focuses on two guiding questions:

Search Behavior:

How are users using the Lab’s search?

Search Results:

Are users finding the content they’re looking for?

Answering these guiding questions will help OET better understand what users are looking for, improve the Lab’s search interface, support Smithsonian educators in building new educational content to fill gaps, and improve support for users who create and publish collections so that those collections are easily discoverable by others.

My focus

During this project, in addition to my role as a Pratt student, I was also a Digital Content Producer at OET. My analytics research for this project focused on understanding patterns in search terms, how users use Refine Search filters, how users search for specific museums, and how software improvements and Smithsonian-created educational content can fill existing gaps and meet user needs.

Timeline

Client Kick-off Meeting

March 23, 2022

➜

Data Analysis

➜

Development of Findings and Recommendations

➜

Client Presentation

May 4, 2022

Our Methodology

Data Source

The data was pulled from Google Analytics UA for January 1, 2020 to April 1, 2022. This period was chosen to capture data from the influx of users at the beginning of the COVID-19 pandemic as educators and caregivers sought remote learning resources.

Data Tools

I used Google Data Studio and Google Sheets as analysis and visualization tools for data pulled from Google Analytics UA, while other teammates also used Microsoft Excel and Tableau. Google Data Studio enabled data visualization in more complex ways than Google Analytics dashboards could provide. Google Sheets enabled the quick organization of data and the development of flexible analysis charts.

Limitations

Our research was limited by:

Lack of event tracking for search on Google Analytics.

Lack of search filter tracking in Google Analytics; apart from Subject filters, filters were not recorded as search terms.

Lack of qualitative data on user motivations; dividing users into exploratory and results-driven searchers is an assumption based on search behavior alone.

Lack of qualitative data on how content is ultimately used; we cannot tell whether sessions with search reflect independent use or classroom teaching.

Metrics

Metrics studied include:

Users

Pageviews

Unique pageviews

Exit pages

Session duration

Landing pages

Source

Sessions with search

Unique searches

Time after search

Average search depth

Search terms

Search filters

Search refinements

Search exits

Findings

View the full findings in our final evaluation report and presentation. The following summarizes the key findings I developed as a team researcher.

1: Searches related to heritage months, followed closely by subject areas, are the most popular.

Searches related to heritage months include “black history,” “hispanic heritage,” “asian pacific american history,” “american indian heritage,” and “women’s history.” Searches for subject areas are highly varied and include both broad subjects (e.g., science) and more specific subjects (e.g., chemistry).

2: Words related to social studies and art topics are the most frequently searched.

Top social studies terms include those connected to America, ancient civilizations, African Americans, and civil rights issues.

3: More users are hand-typing museum names than using the Lab’s existing filters.

This may be influenced by the fact that these filters are only available in a resource search – not a search for collections.
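A finding like this depends on picking out search terms that name a museum. Below is a minimal sketch of one way to tally those terms. It assumes the site-search terms report has been exported as (term, unique searches) pairs. The alias list, the `museum_mentions` function, and the sample rows are hypothetical illustrations, not the study’s actual data or method.

```python
import re
from collections import Counter

# Hypothetical subset of museum names and aliases; a real list would cover
# every Smithsonian unit plus common abbreviations and misspellings.
MUSEUM_ALIASES = {
    "Cooper Hewitt": ["cooper hewitt", "cooper-hewitt"],
    "National Museum of Natural History": ["natural history", "nmnh"],
    "National Air and Space Museum": ["air and space", "nasm"],
}


def museum_mentions(search_terms):
    """Sum unique searches per museum for terms that mention that museum.

    search_terms: iterable of (term, unique_searches) pairs, e.g. rows
    exported from a site-search terms report (assumed format).
    """
    counts = Counter()
    for term, searches in search_terms:
        text = term.lower()
        for museum, aliases in MUSEUM_ALIASES.items():
            # Whole-word match so "nasm" does not match inside a longer word.
            if any(re.search(r"\b" + re.escape(a) + r"\b", text) for a in aliases):
                counts[museum] += searches
    return counts


# Made-up example rows, for illustration only.
rows = [("cooper hewitt textiles", 3), ("nmnh fossils", 2), ("black history", 10)]
print(museum_mentions(rows))
```

Totals like these could then be set beside whatever filter-usage data is available to see which route users prefer.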

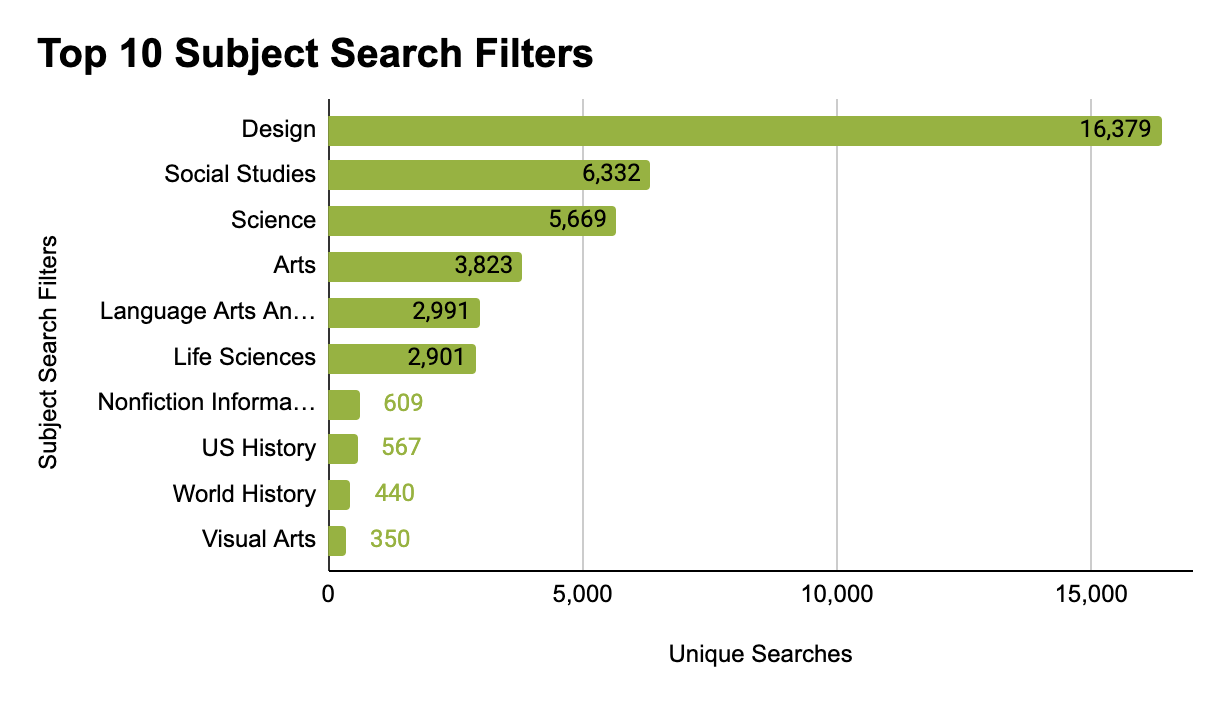

4: The most popular subject filter is Design.

The popularity of the next most-used filters (Social Studies, Science, Arts, and Language Arts and English) may be at least partially attributable to their being highlighted and linked on the Lab’s home page.

5: The most popular age level filter is Elementary, followed by Primary.

The popularity of these filters does not align with the number of collections available for each age level in the Lab.

Recommendations

View the full recommendations in our final evaluation report and presentation. The following summarizes the key recommendations I developed as a team researcher.

1: Build a controlled vocabulary into the Keywords tool based on the most common search terms.

Screenshot of the Smithsonian Learning Lab’s current Keywords tool.

This tool is currently an unrestricted text input field. A controlled vocabulary will increase the recall and precision of collection searches by regulating the terms used to tag collections: it will ensure that collections are tagged with the words users most commonly search for, prevent tagging typos, and give collection creators a starting point for brainstorming keywords.
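Here is a minimal sketch of how a controlled-vocabulary check could behave when a collection creator enters a keyword. Exact matches are accepted, and near misses such as typos get suggestions instead. The vocabulary, the `check_keyword` function, and the 0.8 similarity cutoff are all hypothetical. A real vocabulary would be seeded from the top search terms in the analytics report. Fuzzy matching here uses Python’s standard `difflib.get_close_matches`.

```python
import difflib

# Hypothetical vocabulary; in practice it would be built from the most
# common search terms found in the analytics data.
CONTROLLED_VOCABULARY = (
    "black history",
    "hispanic heritage",
    "women's history",
    "civil rights",
    "science",
    "chemistry",
)


def check_keyword(keyword, vocabulary=CONTROLLED_VOCABULARY, cutoff=0.8):
    """Validate a keyword against a controlled vocabulary.

    Returns (accepted_term, suggestions): accepted_term is the normalized
    vocabulary term on an exact match, otherwise None, in which case
    suggestions lists up to three close vocabulary matches (possibly empty).
    """
    # Normalize case and collapse repeated whitespace before comparing.
    normalized = " ".join(keyword.lower().split())
    if normalized in vocabulary:
        return normalized, []
    suggestions = difflib.get_close_matches(normalized, vocabulary, n=3, cutoff=cutoff)
    return None, suggestions


print(check_keyword("  Black   History "))  # exact match after normalization
print(check_keyword("chemsitry"))           # typo: rejected, with a suggestion
```

Returning suggestions rather than silently correcting the entry keeps the creator in control. The interface could present these as options to pick from.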

2: Develop a call for content from Smithsonian educators to enable the creation of new educational resources based on user needs.

A preview of preliminary focus areas based on findings from this report’s analysis.

This call for content should be based on findings for search result accuracy and top search terms, categories, words, and filters. A full table of recommended focus areas is included in the evaluation report.

3: Make users and groups marked as white-listed in the Lab’s administrative back-end searchable in the Lab.

A mockup of a search for Cooper Hewitt, Smithsonian Design Museum collections.

This will support users looking for collections by searching for a museum’s or user’s name. The previously recommended controlled vocabulary may support this solution; consider pre-populating the keyword field of each collection published by a white-listed user or group with that user’s or group’s name.

Key Takeaways

We met with OET leadership in a final meeting to present our key findings and recommendations, deliver the full analytics report, and share the Google Data Studio dashboard we developed so OET can keep tracking search analytics data on a quarterly basis.

After our presentation, we met with our client to discuss our findings in detail. Overall, OET leadership was very pleased with the report and greatly appreciated the actionable nature of our recommendations. As a member of OET, I can say that we are seriously reflecting on findings and considering recommendation implementation, and we plan to have our first internal presentation of the report and discussion of next steps in late May.

A team with diverse background experiences is a major asset.

Our team came into the project with incredibly varied backgrounds and interests, including writing, database development, graphic design, search platform development, user experience design, anthropology, and museum education. Being able to learn from each other’s diverse experiences as we discussed findings and brainstormed potential solutions made our recommendations much stronger than they would have been with a more homogeneous team.

Don’t be afraid to recommend drastic changes.

As a member of the OET team, I have seen how fresh perspectives shake things up for staff who have been working on the same platform for years. As long as drastic recommendations are grounded in thoughtful data analysis, they can push a team to look at its platform differently.